Infra Play #129: Earnings season Q1'26

On the Duality of Success

It’s been a rough week, in a rough month, in a rough year for SaaS stock prices. The reality in the trenches is of course quite different, as top performers in the leading cloud infrastructure companies continue to sell record deals and many customers are reshaping their companies through cloud+data+AI.

I’ve traditionally done a quarterly deep dive on each hyperscaler, but with Alphabet reporting earnings next week, and given how important the hardware layer has become, we’ll use a slightly different format this time and look at Microsoft, SAP and ASML instead.

This should give us a broader feeling for how demand is shifting between hardware, cloud infrastructure and the enterprise application layer.

The key takeaway

For tech sales and industry operators: SaaS earnings season has started and it's been a bloodbath. Deals have never been bigger, AI adoption is accelerating across every layer of the stack, and yet that's no longer enough. Microsoft delivered the best enterprise sales execution in the industry and was rewarded with the second-largest stock sell-off in tech history. When $357B in value evaporates in a day, expect second-order effects: tighter budget scrutiny, frozen hiring plans, and heightened risk aversion across your entire customer base.

For investors and founders: The only defensible position in this market is vertical integration from silicon to application; everyone else is building on rented land. The agent paradigm doesn't augment workflows, it replaces them entirely, and incumbents can't cannibalize their own seat-based revenue models fast enough to adapt. The opportunity isn't 'infusing agents into workflows' as SAP describes; it's building architectures that assume AI-native operation from day one. The "death of SaaS" narrative is premature in timing but directionally correct. What we're seeing this earnings cycle is a valuation correction, not the disruption itself (that comes later, aggressively).

The week of pain

Satya Nadella: This quarter, the Microsoft Cloud surpassed $50 billion in revenue for the first time, up 26% year-over-year, reflecting the strength of our platform and accelerating demand.

We are in the beginning phases of AI diffusion and its broad GDP impact.

Our TAM will grow substantially across every layer of the tech stack as this diffusion accelerates and spreads.

In fact, even in these early innings, we have built an AI business that is larger than some of our biggest franchises that took decades to build.

Today, I will focus my remarks across the three layers of our stack: Cloud & Token Factory, Agent Platform, and High Value Agentic Experiences.

When it comes to our Cloud & Token Factory, the key to long term competitiveness is shaping our infrastructure to support new high-scale workloads.

We are building this infrastructure out for the heterogenous and distributed nature of these workloads, ensuring the right fit with the geographic and segment-specific needs for all customers, including the long tail.

The key metric we are optimizing for is tokens per watt per dollar, which comes down to increasing utilization and decreasing TCO using silicon, systems, and software.

A good example of this is the 50% increase in throughput we were able to achieve in one of our highest volume workloads – OpenAI inferencing powering our Copilots.

Another example was the unlocking of new capabilities and efficiencies for our Fairwater datacenters.

In this instance, we connected both our Atlanta and Wisconsin sites through AI WAN to build a first-of-its-kind AI superfactory.

Fairwater’s two-story design and liquid cooling allow us to run higher GPU densities and thereby improve both performance and latencies for high-scale training.

All up, we added nearly one gigawatt of total capacity this quarter alone.

At the silicon layer, we have NVIDIA and AMD, and our own Maia chips, delivering the best all-up fleet performance, cost, and supply across multiple generations of hardware.

Earlier this week, we brought online our Maia 200 accelerator.

Maia 200 delivers 10+ petaFLOPS at FP4 precision with over 30% improved TCO, compared to the latest generation hardware in our fleet.

We will be scaling this starting with inferencing and synthetic data gen for our superintelligence team, as well as doing inferencing for Copilot and Foundry.

And given AI workloads are not just about AI accelerators, but also consume large amounts of compute, we are pleased with the progress we are making on the CPU side as well.

Cobalt 200 is another big leap forward, delivering over 50% higher performance compared to our first custom-built processor for cloud-native workloads.

Sovereignty is increasingly top of mind for customers, and we are expanding our solutions and global footprint to match.

We announced DC investments in seven countries this quarter alone, supporting local data residency needs.

And we offer the most comprehensive set of sovereignty solutions across public, private, and national partner clouds, so customers can choose the right approach for each workload, with the local control they require.

I think it's telling that the call opens with two themes: the accelerating growth of Azure and the effort to keep adding capacity, ideally in a more efficient form. This comes back to bite Microsoft later in the call, when Satya has to admit that Azure's growth was held back by a lack of sufficient infrastructure. I've covered this previously as "Satya's flinch."

Satya Nadella: Next, I want to talk about the agent platform.

Like in every platform shift, all software is being rewritten. A new app platform is born.

You can think of agents as the new Apps.

And to build, deploy, and manage agents, customers will need a model catalog, tuning services, harnesses for orchestration, services for context engineering, AI safety, management, observability, and security.

It starts with having broad model choice.

Our customers expect to use multiple models as part of any workload that they can fine-tune and optimize based on cost, latency, and performance requirements.

And we offer the broadest selection of models of any hyperscaler.

This quarter, we added support for GPT 5.2, as well as Claude 4.5.

Already, over 1,500 customers have used both Anthropic and OpenAI models on Foundry.

We are seeing increasing demand for region-specific models, including Mistral and Cohere, as more customers look for sovereign AI choices.

And we continue to invest in our first-party models, which are optimized to address the highest value customer scenarios, such as productivity, coding, and security.

As part of Foundry, we also give customers the ability to customize and finetune models.

Increasingly, customers want to be able to capture the tacit knowledge they possess inside of model weights as their core IP.

This is probably the most important sovereign consideration for firms as AI diffuses more broadly across our GDP and every firm needs to protect their enterprise value.

For agents to be effective, they need to be grounded in enterprise data and knowledge.

That means connecting their agents to systems of record and operational data, analytical data, as well as semi-structured and unstructured productivity and communications data.

And this is what we are doing with our unified IQ layer, spanning Fabric, Foundry, and the data powering Microsoft 365.

In a world of context engineering, Foundry Knowledge and Fabric are gaining momentum.

Foundry Knowledge delivers better context with automated source routing and advanced agentic retrieval, while respecting user permissions.

And Fabric brings together end-to-end operational, real-time, and analytical data.

Two years since it became broadly available, Fabric’s annual revenue run rate is now over two billion dollars, with over 31,000 customers.

And it continues to be the fastest growing analytics platform on the market, with revenue up 60% year-over-year.

All-up, the number of customers spending one million dollars-plus per quarter on Foundry grew nearly 80%, driven by strong growth in every industry.

And over 250 customers are on track to process over one trillion tokens on Foundry this year.

There are many great examples of customers using all of this capability on Foundry to build their own agentic systems.

Alaska Airlines is creating natural language flight search.

BMW is speeding up design cycles.

Land O’Lakes is enabling precision farming for co-op members.

And Symphony.AI is addressing bottlenecks in the CPG industry.

And of course Foundry remains a powerful on-ramp for our entire cloud.

The vast majority of Foundry customers use additional Azure solutions like developer services, app services, databases, as they scale.

Beyond Fabric and Foundry, we are also addressing agent building by knowledge workers with Copilot Studio and Agent Builder.

Over 80% of the Fortune 500 have active agents built using these low-code/no-code tools.

As agents proliferate, every customer will need new ways to deploy, manage, and protect them.

We believe this creates a major new category and significant growth opportunity for us.

This quarter, we introduced Agent 365, which makes it easy for organizations to extend their existing governance, identity, security, and management to agents.

That means the same controls they already use across Microsoft 365 and Azure now extend to agents they build and deploy on our cloud or any other cloud.

And partners like Adobe, Databricks, Genspark, Glean, NVIDIA, SAP, ServiceNow, and Workday are already integrating Agent 365.

We are the first provider to offer this type of agent “control plane” across clouds.

I don’t know whether I would refer to agents as “the new apps” since they can have significantly broader scope than anything we are used to when it comes to applications, as well as have a very different UX. To call them “apps” is more of a disservice to them, but Satya has to convince the analysts (who are about to dump his stock) that Microsoft is in the center of this shift.

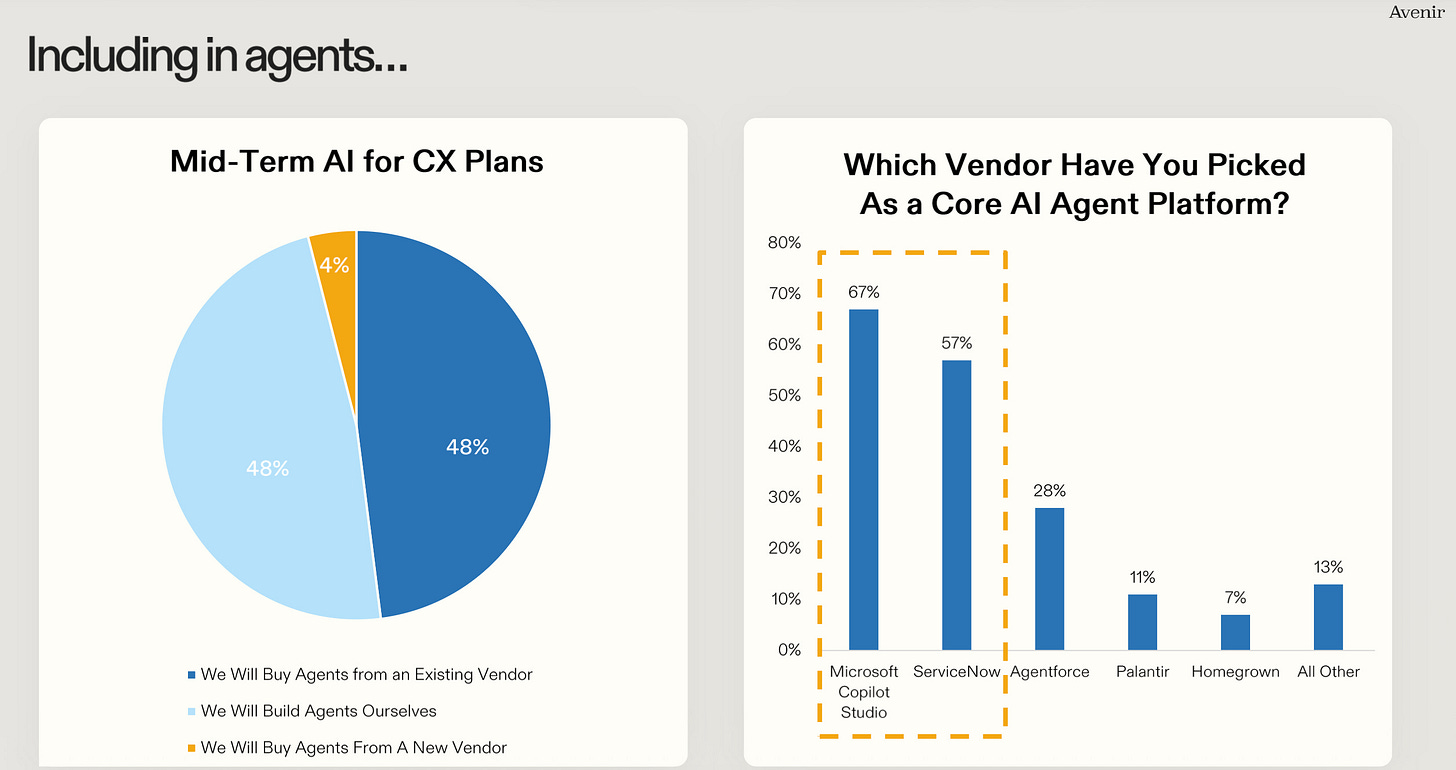

And in the center they are. In my “Is SaaS dead?” article, I covered this suspicious chart:

The reason I found this rather confusing is that the majority of agents are being deployed on the hyperscalers' developer platforms, not the "premade" tooling. By the way, when I say that the biggest challenge for software companies is competing with the hyperscalers, Fabric is a poster child of this dynamic. In two years, the product has reached $2B ARR. It's literally one of the most successful analytics products ever made, and we barely even mention it. It's just another massive line item of growth in the Azure portfolio. If you had a VC-funded startup doing those figures, you would be hearing about it on every single podcast as one of the biggest success stories to come out of Silicon Valley.

Satya Nadella: Now, let us turn to the high value agentic experiences we are building.

AI experiences are intent-driven and are beginning to work at task-scope.

We are entering an age of “macro delegation and micro steering” across domains.

Intelligence using multiple models is built into multiple form factors.

You see this in chat, in new agent inbox apps, “coworker” scaffoldings, agent workflows embedded in applications and IDEs that are used every day, or even in your command line with file system access and skills.

That is the approach we are taking with our first-party family of Copilots, spanning key domains.

In consumer, for example, Copilot experiences span chat, news feed, search, creation, browsing, shopping, and integrations into the operating system.

And it is gaining momentum.

Daily users of our Copilot app increased nearly 3X year-over-year.

And with Copilot Checkout, we have partnered with PayPal, Shopify, and Stripe so customers can make purchases directly within the app.

With Microsoft 365 Copilot, we are focused on organization-wide productivity.

Work IQ takes the data underneath Microsoft 365 and creates the most valuable stateful agent for every organization.

It delivers powerful reasoning capabilities over people, their roles, their artifacts, their communications, and their history and memory – all within an organization’s security boundary.

Microsoft 365 Copilot’s accuracy and latency powered by Work IQ is unmatched, delivering faster and more accurate work-grounded results than competition.

And we have seen our biggest quarter-over-quarter improvement in response quality to date.

This has driven record usage intensity, with the average number of conversations per user doubling year-over-year.

Microsoft 365 Copilot also is becoming a true daily habit, with daily active users increasing 10X year-over-year.

We are also seeing strong momentum with Researcher agent which supports both OpenAI and Claude, as well as Agent Mode in Excel, PowerPoint, and Word.

All up, it was a record quarter for Microsoft 365 Copilot seat adds, up over 160% year-over-year.

We saw accelerating seat growth quarter-over-quarter and now have 15 million paid Microsoft 365 Copilot seats, and multiples more enterprise Chat users.

And we are seeing larger commercial deployments.

The number of customers with over 35,000 seats tripled year-over-year.

Fiserv, ING, NASA, University of Kentucky, University of Manchester, US Department of Interior, and Westpac, all purchased over 35,000 seats.

Publicis alone purchased over 95,000 seats for nearly all its employees.

We are also taking share in Dynamics 365 with built-in agents across the entire suite.

A great example of this is how Visa is turning customer conversation data into knowledge articles with our Customer Knowledge Management Agent in Dynamics.

And how Sandvik is using our Sales Qualification Agent to automate lead qualification across tens of thousands of potential customers.

In coding, we are seeing strong growth across all paid GitHub Copilot.

Copilot Pro+ subs for individual devs increased 77% quarter-over-quarter.

And, all up now we have over 4.7 million paid Copilot subscribers, up 75% year-over-year.

Siemens, for example is going all in on GitHub, adopting the full platform to increase developer productivity, after a successful Copilot rollout to 30,000 of its developers.

GitHub Agent HQ is the organizing layer for all coding agents, like Anthropic, OpenAI, Google, Cognition, and xAI, in the context of customers’ GitHub repos.

With Copilot CLI and VS Code, we offer developers the full spectrum of form factors and models they need for AI-first coding workflows.

And when you add Work IQ as a skill or an MCP to our developer workflow, it is a game changer, surfacing work context like emails, meetings, docs, projects, messages, and more.

You can simply ask the agent to plan and execute changes to your code based on an update to a spec in SharePoint, or using the transcript of your last engineering and design meeting in Teams!

And we are going beyond that with the GitHub Copilot SDK.

Developers can now embed the same runtime behind Copilot CLI—multi-model, multi-step planning, tools, MCP integration, auth, streaming—directly into their applications.

In security, we added a dozen new and updated Security Copilot agents across Defender, Entra, Intune, and Purview.

For example, Icertis’ SOC team used Security Copilot agents to reduce manual triage time by 75%, which is a real game-changer in an industry facing a severe talent shortage.

To make it easier for security teams to onboard, we are rolling out Security Copilot to all E5 customers.

And our security solutions are also becoming essential to manage organizations’ AI deployments.

24 billion Copilot interactions were audited by Purview this quarter, up 9X year-over-year.

Finally, I want to talk about two additional high impact agentic experiences.

First, in healthcare, Dragon Copilot is the leader in its category, helping over one hundred thousand medical providers automate their workflows.

Mount Sinai Health is now moving to a system-wide Dragon Copilot deployment for providers after a successful trial with its primary care physicians.

All up, we helped document 21 million patient encounters this quarter, up 3X year-over-year.

And, second, when it comes to science and engineering, companies like Unilever in consumer goods and Synopsys in EDA are using Microsoft Discovery to orchestrate specialized agents for R&D end to end.

They are able to reason over scientific literature and internal knowledge, formulate hypotheses, spin up simulations, and continuously iterate to drive new discoveries.

The biggest challenge with the “Copilot is winning” story is that Microsoft has been renaming everything under the brand. The goal is rather obvious: give a bite-sized overview of the overall adoption of seat-based tools across the customer base, essentially as a parallel metric next to Azure’s ARR growth, which is predominantly usage-driven.

The reality is that the brand is perceived very negatively in the consumer space and has been underperforming in its original enterprise incarnation as GitHub Copilot. Folding essentially the full Microsoft suite of tools under it just makes the story sound a lot less credible than it should.

Brent Thill: Amy, on 45% of the backlog being related to OpenAI, I’m just curious if you can comment. There’s obviously concern about the durability. And I know maybe there’s not much you can say on this, but I think everyone’s concerned about the exposure, and if you could maybe talk through your perspective and what both you and Satya are seeing.

Amy Hood: I think maybe I would have thought about the question quite differently, Brent. The first thing to focus on is the reason we talked about that number is because 55%, or roughly $350 billion, is related to the breadth of our portfolio, a breadth of customers across solutions, across Azure, across industries, across geographies.

That is a significant RPO balance, larger than most peers, more diversified than most peers. And frankly, I think we have super high confidence in it. And when you think about that portion alone growing 28%, it’s really impressive work on the breadth as well as the adoption curve that we’re seeing, which is, I think what I get asked most frequently. It’s grown by customer segment, by industry and by geo. And so, it’s very consistent.

And so, then if you’re asking about how do I feel about OpenAI and the contract and the health, listen, it’s a great partnership. We continue to be their provider of scale. We’re excited to do that. We sit under one of the most successful businesses built, and we continue to feel quite good about that. It’s allowed us to remain a leader in terms of what we’re building and being on the cutting edge of app innovation.

The three main bearish arguments that the analysts used to dump the stock were essentially "you keep spending too much CapEx on AI, 45% of your backlog is with OpenAI, and you can't even service all of the demand."
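To put the backlog concern in dollar terms, here is a rough back-of-the-envelope sketch using only the two figures from the exchange above: 45% of the backlog is related to OpenAI, and the remaining 55% is "roughly $350 billion". The function and variable names are my own, and because the $350B figure is itself rounded, the derived numbers are estimates rather than reported values.

```python
# Figures quoted on the call. "Roughly $350 billion" is approximate,
# so everything derived from it below is an estimate, not a reported value.
NON_OPENAI_SHARE = 0.55        # share of backlog NOT tied to OpenAI
NON_OPENAI_BACKLOG_B = 350.0   # that share in billions of USD (approximate)


def implied_backlog(non_openai_backlog_b: float, non_openai_share: float) -> dict:
    """Back out the total backlog and the OpenAI-related portion."""
    total_b = non_openai_backlog_b / non_openai_share
    openai_b = total_b - non_openai_backlog_b
    return {"total_b": total_b, "openai_b": openai_b}


result = implied_backlog(NON_OPENAI_BACKLOG_B, NON_OPENAI_SHARE)
print(f"Implied total backlog:          ~${result['total_b']:.0f}B")
print(f"Implied OpenAI-related backlog: ~${result['openai_b']:.0f}B")
```

Under these assumptions, the OpenAI-related commitment alone works out to something in the high $200 billions, which is why a single customer's durability dominated the Q&A.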

Keith Weiss: Excellent. Thank you guys for taking the question.

I’m looking at a Microsoft print where earnings is growing 24% year on year, which is a spectacular result. Great execution on your part, top line growing well, margins expanding, but I’m looking at after-hours trading, and the stock is still down.

And I think one of the core issues that is weighing on investors is CapEx is growing faster than we expected, and maybe Azure is growing a little bit slower than we expected. And I think that fundamentally comes down to a concern on the ROI on this CapEx spend over time.

I was hoping you guys could help us fill in some of the blanks a little bit in terms of how should we think about capacity expansion and what that can yield in terms of Azure growth, going forward. But more to the point, how should we think about the ROI on this investment as it comes to fruition? Thanks, guys.

Amy Hood: I think the first thing I think you really asked a very direct correlation that I do think many investors are doing, which is between the CapEx spend and seeing an Azure revenue number. And we tried last quarter, and I think again this quarter, to talk more specifically about all the places that the CapEx spend, especially the short-lived CapEx spend across CPU and GPU, and where that will show up.

Sometimes, I think it’s probably better to think about the Azure guidance that we give as an allocated capacity guide about what we can deliver in Azure revenue, because as we spend the capital and put GPUs, specifically, it applies to CPUs, but GPUs more specifically, we’re really making long-term decisions.

And the first thing we’re doing is solving for the increased usage in sales and the accelerating pace of M365 Copilot, as well as GitHub Copilot, our first-party apps. Then we make sure we’re investing in the long-term nature of R&D and product innovation. And much of the acceleration that I think you’ve seen from us in products over the past bit is coming because we are allocating GPUs and capacity to many of the talented AI people we’ve been hiring over the past years.

Then when you end up, is that you end up with the remainder going towards serving the Azure capacity that continues to grow in terms of demand. And a way to think about it, because I think I get asked this question sometimes, is if I had taken the GPUs that just came online in Q1 and Q2, in terms of GPUs, and allocated them all to Azure, the KPI would have been over 40.

I think this is where the "duality of success" comes into play. Azure grew 39% YoY, and Microsoft funneled as much as it could of the roughly 1GW of new capacity added this quarter toward that demand. Had all of the new compute gone to Azure, growth would likely have ended up a couple of percentage points higher. The fact that they are still investing clearly critical infrastructure in other long-term plays is good, but it was not perceived that way. Together with slightly lower growth expectations for next quarter (37% to 38%), well…

This became the second-largest sell-off in history, with $357B in market value lost.

If Redmond is, well, red, let’s see how things are going in Walldorf.