Why behind AI: End of year shopping spree

Groq and Manus AI "join forces" with bigger players

Never a dull moment in cloud infrastructure software. At a time when regular corporate activity is dead, frontier labs keep releasing models and large players are trying to get deals done.

The two big acquisitions come from opposite angles: one is a specialized hardware company focused on fast inference (with a cloud service on top), and the other is agentic scaffolding on top of Claude for business process tasks, or as the kids call it these days, the application layer.

Today, Groq announced that it has entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology. The agreement reflects a shared focus on expanding access to high-performance, low cost inference.

As part of this agreement, Jonathan Ross, Groq’s Founder, Sunny Madra, Groq’s President, and other members of the Groq team will join Nvidia to help advance and scale the licensed technology.

Groq will continue to operate as an independent company with Simon Edwards stepping into the role of Chief Executive Officer.

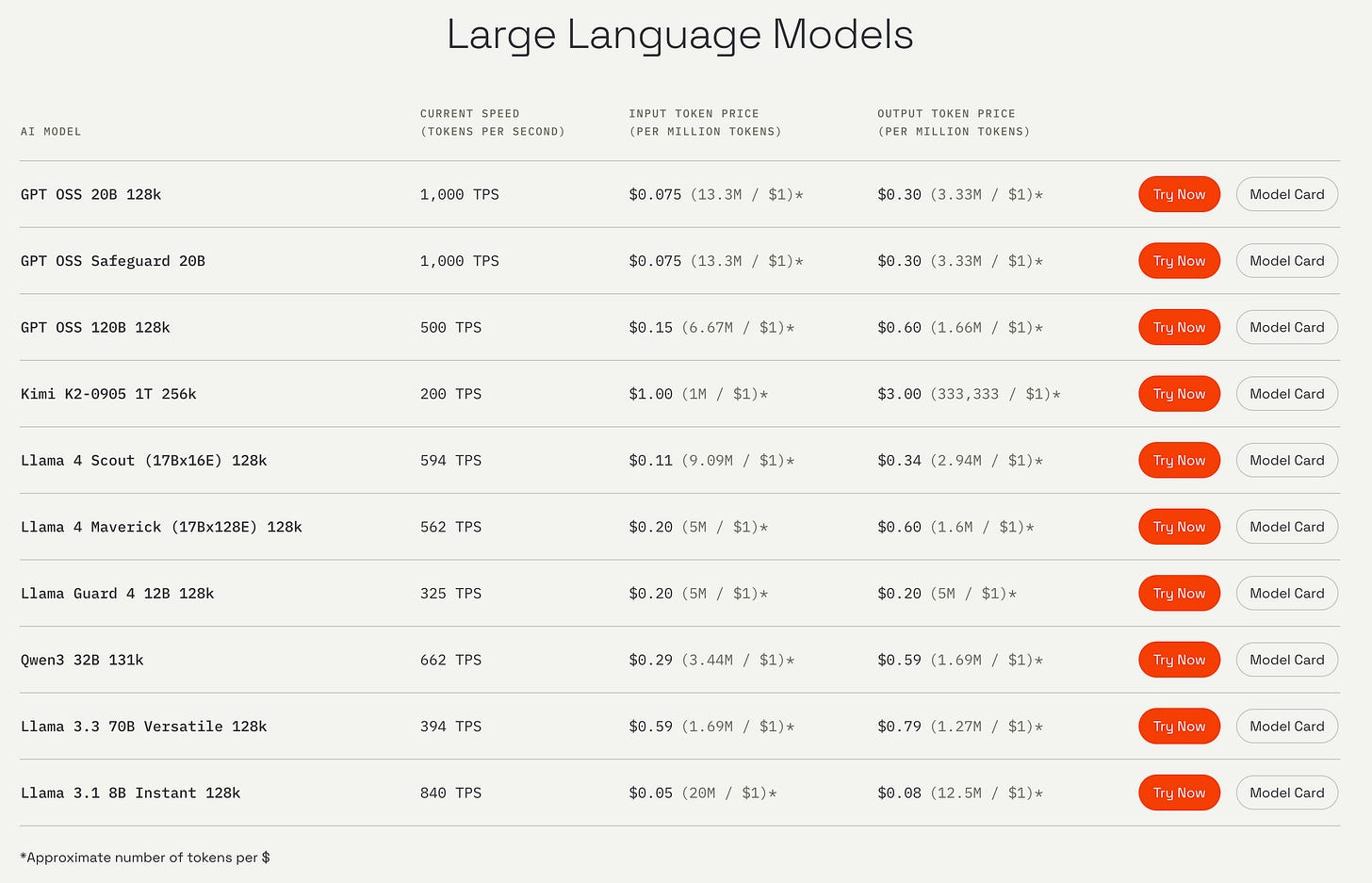

GroqCloud will continue to operate without interruption.

The fun wording of "non-exclusive licensing agreement" roughly translates into an acquihire. Similar to the Windsurf and Scale AI deals, rather than attempting a buyout and going through regulatory scrutiny, NVIDIA is picking up 92% of the employees and the relevant technology for $20B, while the remaining staff will run GroqCloud as a service.

GroqCloud offers multiple open-source models, hosted at a very low price and with exceptionally high tokens-per-second inference. The issue, of course, is that outside of Kimi K2, none of these are actually frontier-quality models. The difficulty of getting GroqCloud to scale, together with finding sufficient buyers for their chips, led Groq to revise its revenue projection for the year from $2B down to $500M. Barely $40M of that is ARR from GroqCloud.

The move reflects both the opportunity and the difficulty of bringing specialized chips to market. All of the investors are getting rewarded for getting this far in the game, but arguably 2025 demonstrated that open-source models have a very narrow window of opportunity, and the hardware that benefits the most from supporting those models is being impacted. Some additional reporting from The Information:

Huang appears to have recognized the growing demand for more specialized chips for inference workloads, or handling applications running AI models. Nvidia in September released a specialized chip, the Rubin CPX, aimed at handling such workloads better than its other chips. However, the chip was still based on its more general purpose graphics processing units rather than the more specialized chips its competitors including Groq are designing.

“Groq’s first-generation chips were not competitive [with Nvidia’s chips], but there are two [more] generations coming back-to-back soon,” said Dylan Patel, chief analyst at chip consultancy SemiAnalysis. “Nvidia likely saw something they were scared of in those.”

Nvidia faces additional competition from Google’s TPUs that can be used for developing AI models as well as inference workloads. Major companies such as Apple have used TPUs rather than Nvidia GPUs to train their largest AI models, and Anthropic has also become a major TPU buyer.

Other large customers of Nvidia chips, including Meta and OpenAI, are also working on their own specialized inference chips for running AI models as a way to reduce the stranglehold Nvidia has on their technologies.

Challenging Nvidia directly has been difficult for other startups besides Groq. Such startups have increasingly sought to be acquired. Intel, for instance, is in advanced talks to acquire AI chip startup SambaNova and the deal could be announced as soon as next month, according to a person with knowledge of the discussions. And in October, Meta acquired AI chip startup Rivos to boost its internal chip development. In June, Advanced Micro Devices hired the staff behind Untether AI, which also develops chips for running AI models.

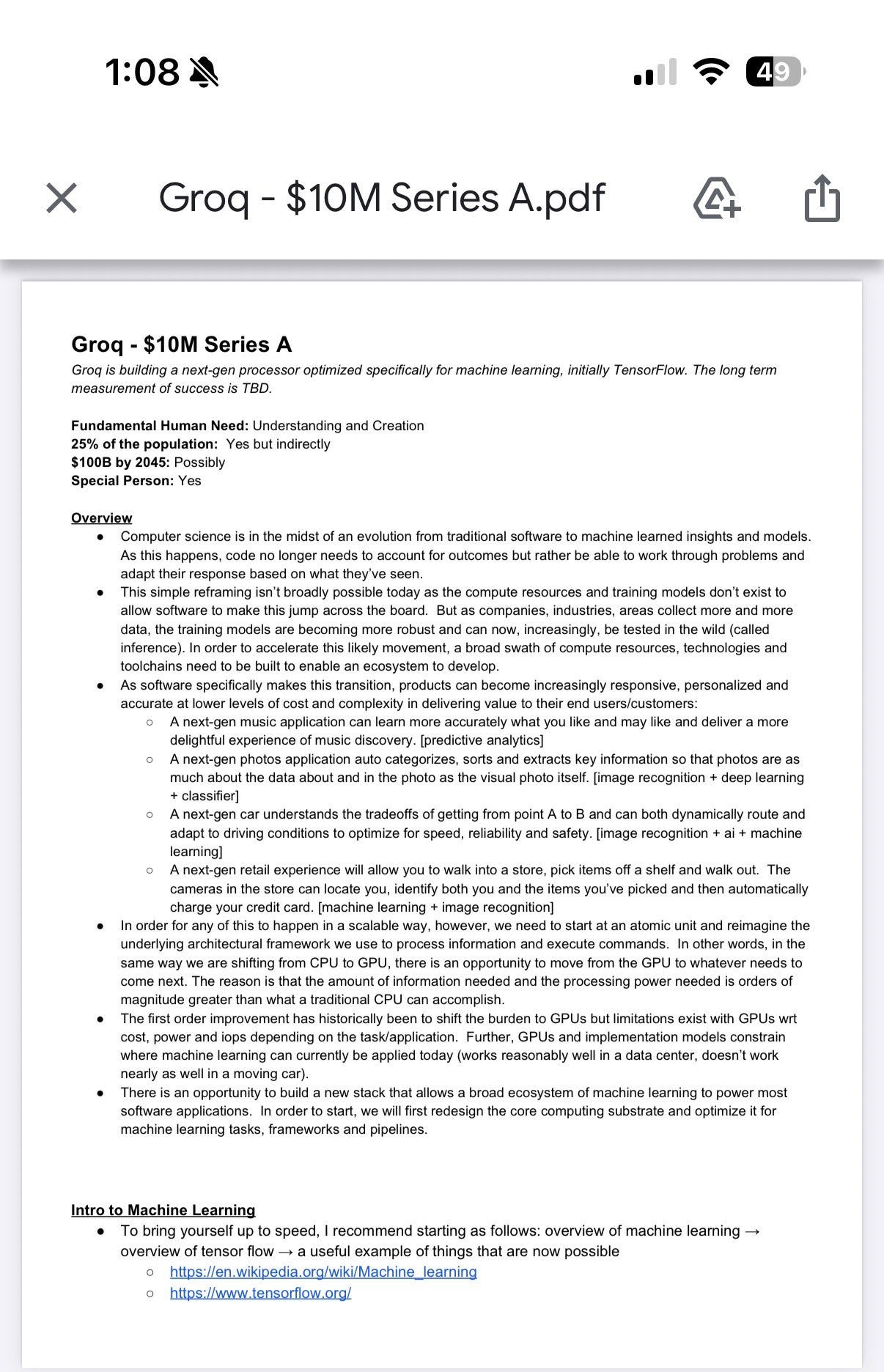

Speaking of the investor side of the deal, Chamath actually shared his original investor memo, which is a good window into what the thinking and pitch looked like in 2016:

Jonathan Ross moving to NVIDIA is the biggest story out of this, and it will have long-term implications for the industry. With frontier labs driving the biggest innovation on the intelligence and reasoning side, combining the existing NVIDIA designs for model training with inference chips optimized for speed and efficiency using Groq patents is a very exciting development. It's also one that Jensen clearly wanted to get done without getting stuck in regulatory hell for a year, hence the acquihire.

On the other side of the equation is the Meta acquisition of Manus AI.

The news is out, and it’s big: Manus is joining Meta.

This announcement is more than just a headline—it’s validation of our pioneering work with General AI Agents.

Since the launch, Manus has focused on building a general-purpose AI agent designed to help users tackle research, automation, and complex tasks. Through continuous product iteration, we’ve been working hard to make these capabilities more reliable and useful across a growing range of real-world use cases. In just a few months, our agent has processed more than 147 Trillion tokens and powered the creation of over 80 Million virtual computers.

We believe in the potential of autonomous agents, and this development reinforces Manus’s role as an execution layer — turning advanced AI capabilities into scalable, reliable systems that can carry out end-to-end work in real-world settings.

Our top priority is ensuring that this change won’t be disruptive for our customers. We will continue to sell and operate our product subscription service through our app and website. The company will continue to operate from Singapore.

Our solution is driving value for millions of users worldwide today. With time, we hope to expand this subscription to the millions of businesses and billions of people on Meta’s platforms.

“Joining Meta allows us to build on a stronger, more sustainable foundation without changing how Manus works or how decisions are made,” said Xiao Hong, CEO of Manus. “We’re excited about what the future holds with Meta and Manus working together and we will continue to iterate the product and serve users that have defined Manus from the beginning.”

Unironically written by Claude (but I’m sure prompted in Manus AI), the announcement glosses over several interesting elements.

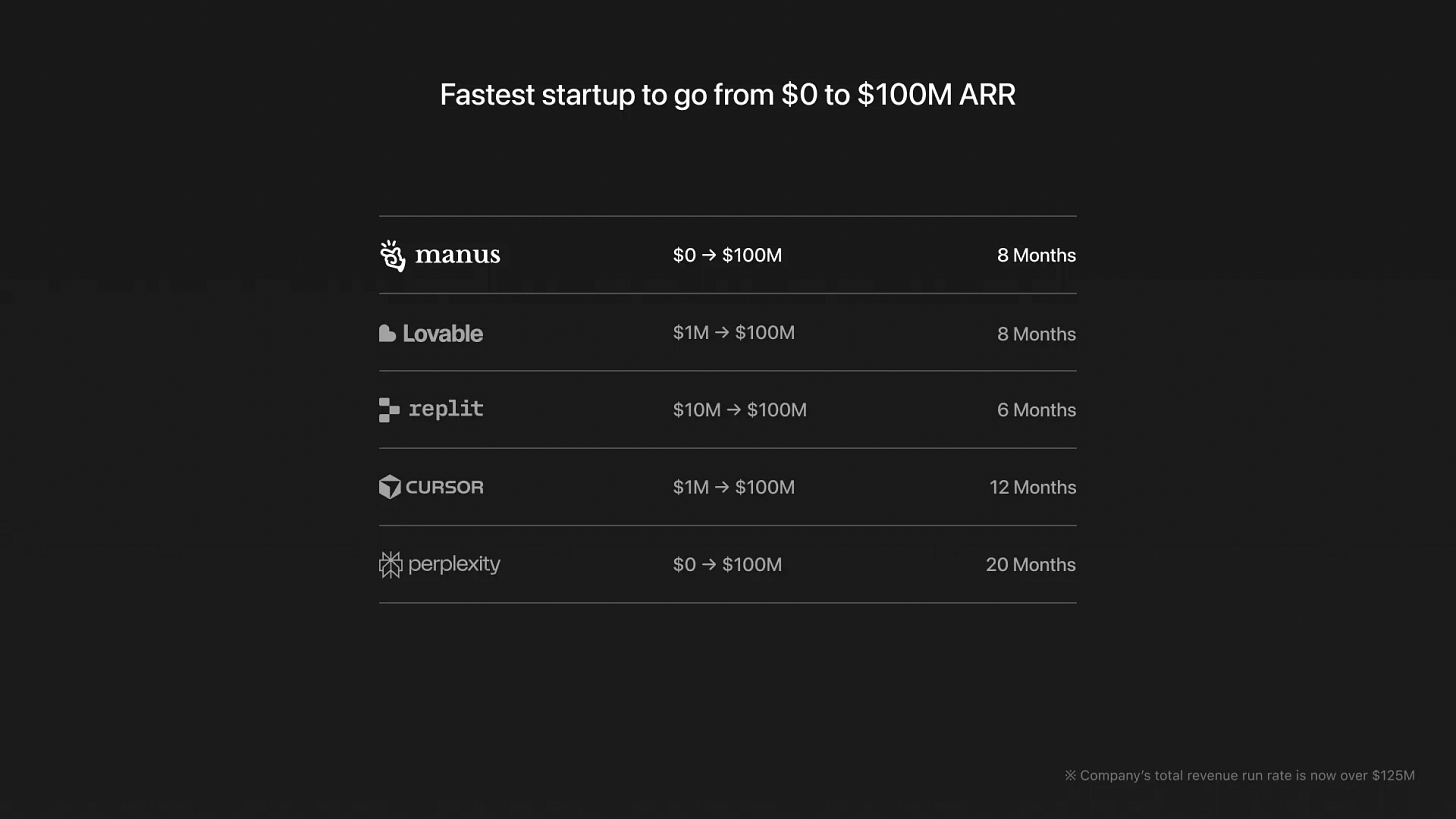

Two weeks ago, Manus AI actually had a different announcement, highlighting that they've reached $100M ARR. The leadership team is a good example of founders who took monetizing the western market very seriously. They started out as a Chinese startup called Butterfly Effect and first launched a "GPT for browsers" plugin called Monica, before later moving on to Manus AI as their flagship product. Focused on expanding quickly, they raised a $75M Series B with Benchmark (but with Tencent remaining in the game as the original investor), laid off their staff in China and positioned Manus as a leading product in its niche.

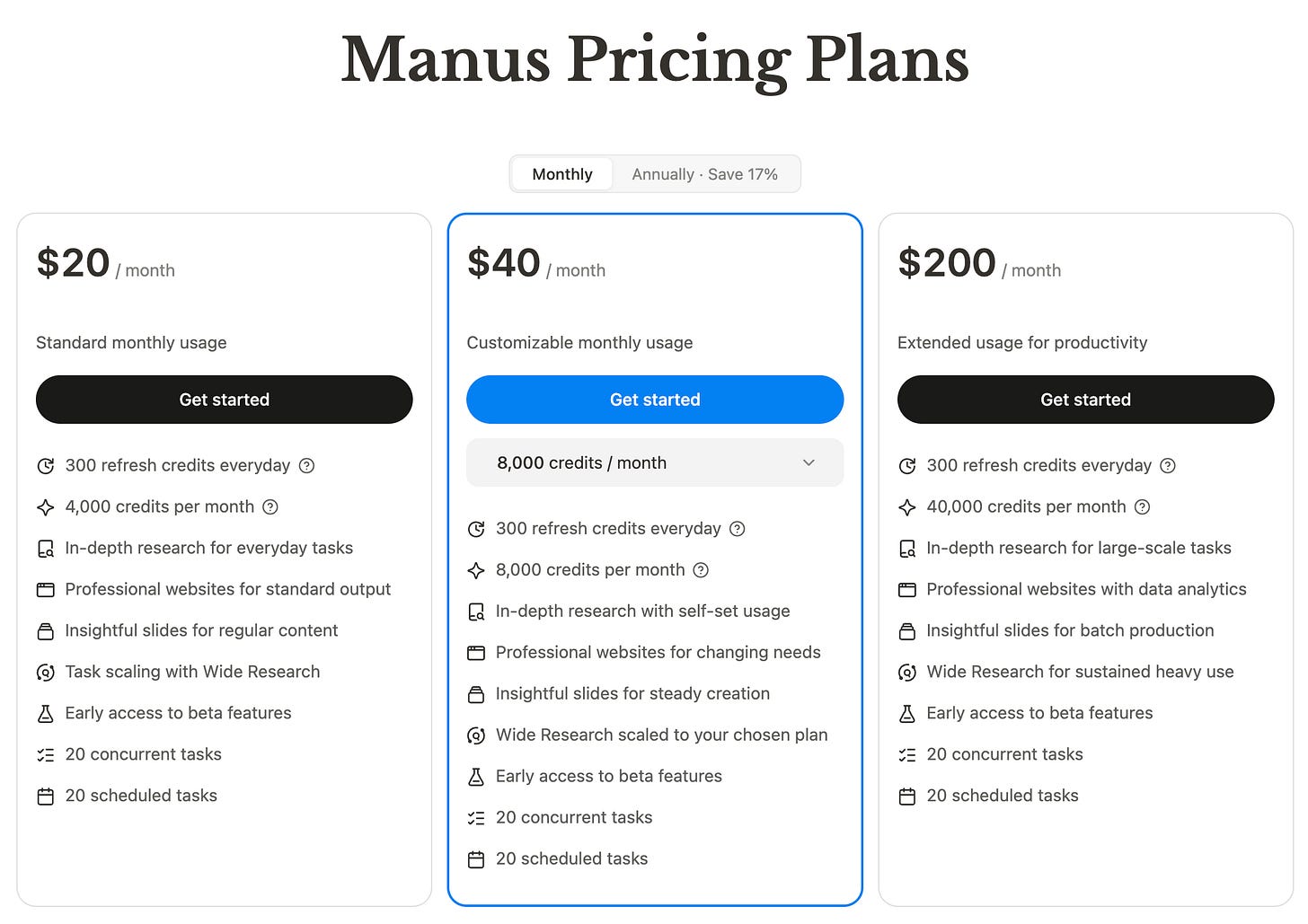

While also leveraging some open-source models for specific tasks, Manus was mostly built on top of Claude models and benefited both from Anthropic's pivotal year and from the lab's inability to deliver a front end that makes sense. While Anthropic's models can handle all of these tasks, the actual output users got from the Claude apps on either mobile or web was poor.

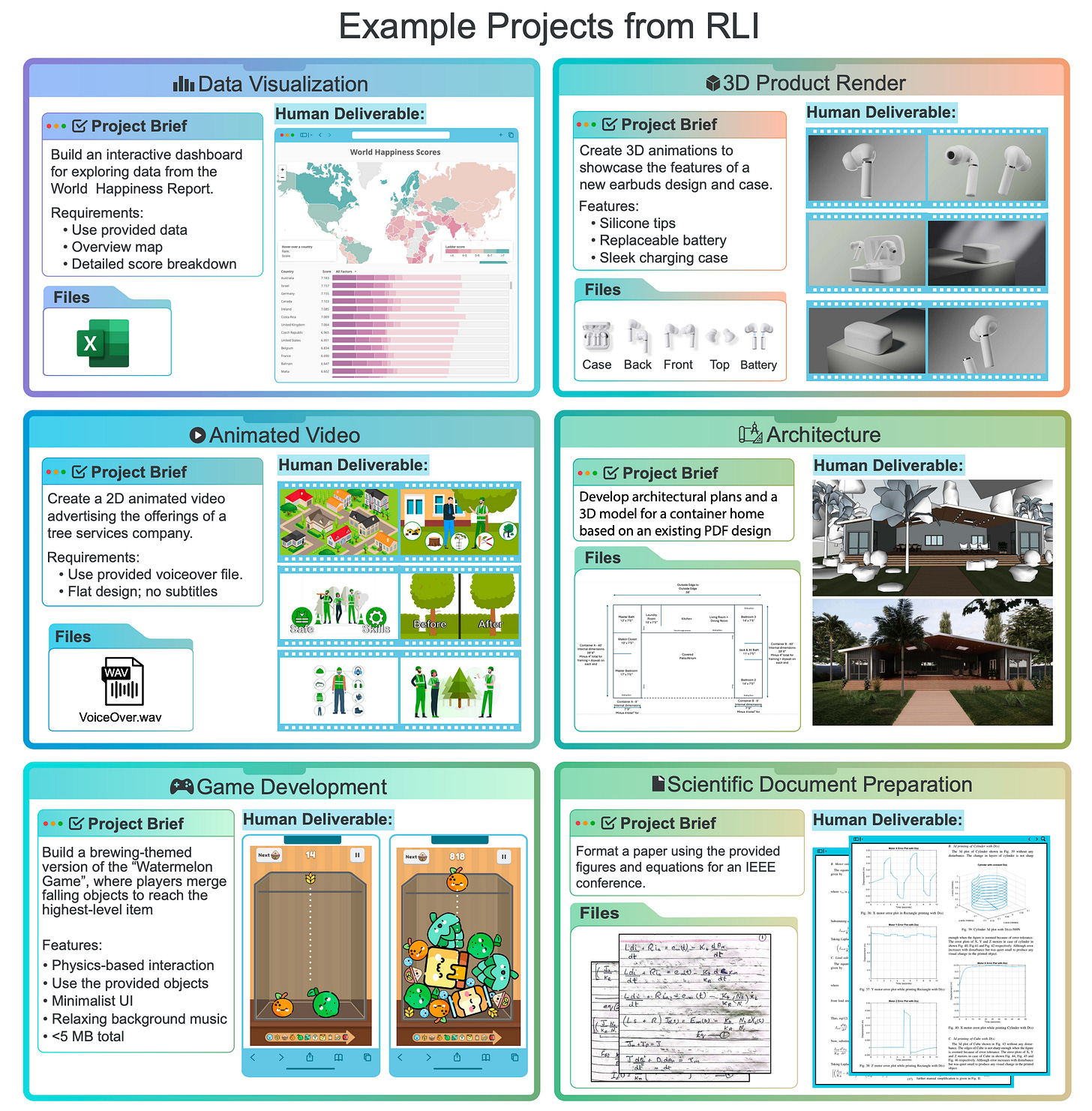

What Manus was able to do was deliver solid scaffolding that made the models usable for the business process tasks that many users wanted to leverage them for. Funnily enough, the biggest recognition outside of user adoption came from a rather bearish paper on the “Remote Labor Index”, a study that Scale AI (who were acquihired by Meta) collaborated on.

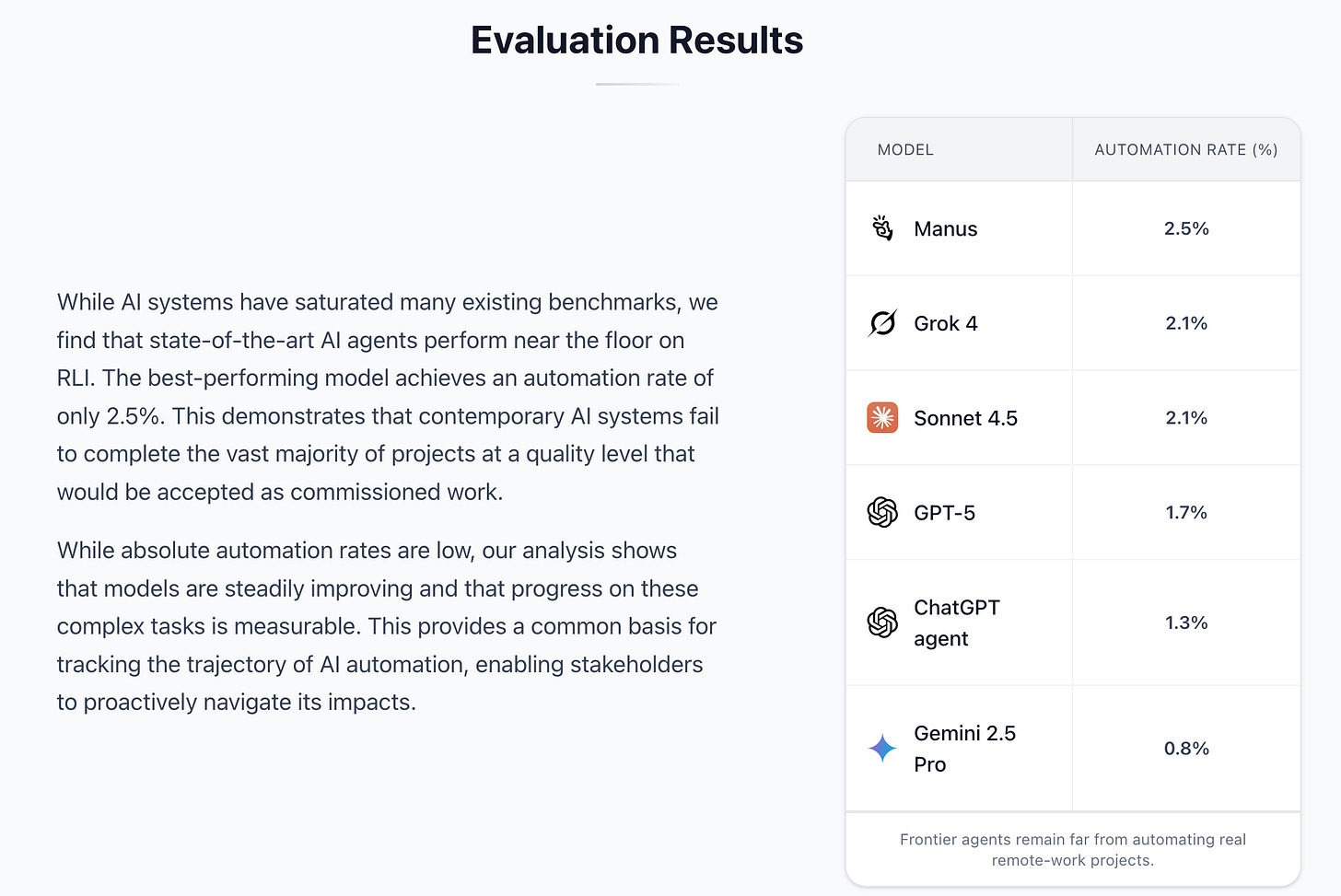

The main finding of the study was that existing models, used through their customer-facing applications on real-life workloads from Upwork, did not show very promising performance:

Still, Manus actually benchmarked better than using the models directly in their native interfaces. It would've been interesting to see in what direction they would take their product after what's been clearly an outstanding launch year for them.

With WSJ reporting $2B for the acquisition (the estimated valuation for their next round), the deal is mostly interesting because of its buyer, Meta. As the company continues to struggle to deliver compelling AI products, while holding the best distribution opportunity after Google, it appears that Zuckerberg has decided to push on the application layer as well, rather than limiting the team to improving on the model training side. Still, Manus was built on top of a frontier model and was paying current API prices in order to run its operations.

Offering the same capability free to users and subsidizing the cost would be a great boost for Anthropic, but makes no sense given the current investor sentiment towards Meta. The obvious change here would be if Meta can deliver a frontier model next year that is able to keep Manus running efficiently, and then scale this out to its users in a similar way to what Google does currently (with Gemini and office products being available for free, but with significant rate limits that require paid subscriptions).

A lot of the coverage around the acquisition also focuses on the Chinese origins of Manus AI, but this is a rather overplayed story at a time when more than 50% of the top AI researchers in the industry come from China. The team behind Manus AI clearly aimed at competing in the western market and delivered a product suitable for its audience, which is also what attracted Benchmark into investing.

Both acquisitions are long-term plays, but ambitious ones, and both were done quickly. This is how things move today in the world of cloud infrastructure software and AI.
